Virtual threads are the ideal mechanism for running mostly blocking tasks, providing a high level of concurrency without requiring asynchronous acrobatics from business logic programmers. I show that it is easy to configure Tomcat for virtual threads, provided one makes a small change to the Tomcat source code.

Java 19 has virtual threads as a preview feature, described in JEP 425. Virtual threads are scheduled to run in platform threads. When a virtual thread blocks, it is parked and another virtual thread can run in its place. Large numbers of virtual threads can run concurrently, provided that they mostly block. This workload is typical in web applications where requests spend much of their time waiting for responses from database queries or other external services.
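To make the claim about large numbers of mostly blocked threads concrete, here is a minimal sketch. It assumes Java 21 or later (or Java 19/20 with --enable-preview); the class and method names are made up for illustration.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch only: starts many virtual threads that spend nearly all their time blocked.
class ManySleepers {
    // Starts n virtual threads that each sleep briefly and returns how many finished.
    static int runSleepers(int n) {
        AtomicInteger finished = new AtomicInteger();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            threads.add(Thread.startVirtualThread(() -> {
                try {
                    // Sleeping parks the virtual thread; its carrier can run another one
                    Thread.sleep(Duration.ofMillis(100));
                    finished.incrementAndGet();
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                }
            }));
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(ex);
            }
        }
        return finished.get();
    }

    public static void main(String[] args) {
        System.out.println(runSleepers(10_000) + " virtual threads finished");
    }
}
```

Starting the same number of platform threads would typically be far more expensive, since each one reserves its own OS thread and stack.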

Tasks for virtual threads can be written in synchronous style, expressing control flow with the standard Java language constructs: method calls (which may block), loops, and exceptions. This is in contrast to the asynchronous style with callbacks or chained completable futures.

For example, in an application that harvested images, I had the following asynchronous style code:

    CompletableFuture.supplyAsync(info::getUrl, pool)
        .thenCompose(url -> getBodyAsync(url, HttpResponse.BodyHandlers.ofString()))
        .thenApply(info::findImage)
        .thenCompose(url -> getBodyAsync(url, HttpResponse.BodyHandlers.ofByteArray()))
        .thenApply(info::setImageData)
        .thenAccept(this::process)
        .exceptionally(t -> { t.printStackTrace(); return null; });

With virtual threads, I was able to rewrite it like this:

    try {
        String page = getBody(info.getUrl(), HttpResponse.BodyHandlers.ofString());
            // Blocks, but with virtual threads, blocking is cheap
        String imageUrl = info.findImage(page);
        byte[] data = getBody(imageUrl, HttpResponse.BodyHandlers.ofByteArray()); // Blocks
        info.setImageData(data);
        process(info);
    } catch (Throwable t) {
        t.printStackTrace();
    }
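The getBody helper is not shown in the article. A plausible sketch, assuming it wraps the blocking send method of java.net.http.HttpClient, could look like this. The class name and the local test server are illustrative additions so that the example runs without network access.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

class GetBodySketch {
    private static final HttpClient client = HttpClient.newHttpClient();

    // Hypothetical helper: sends a GET request and blocks until the body has arrived.
    static <T> T getBody(String url, HttpResponse.BodyHandler<T> handler)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        return client.send(request, handler).body();
    }

    // Starts a throwaway local server, fetches one page from it, and shuts it down.
    static String fetchFromLocalServer() {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/hello", exchange -> {
                byte[] bytes = "hello".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, bytes.length);
                try (var out = exchange.getResponseBody()) {
                    out.write(bytes);
                }
            });
            server.start();
            try {
                String url = "http://127.0.0.1:" + server.getAddress().getPort() + "/hello";
                return getBody(url, HttpResponse.BodyHandlers.ofString());
            } finally {
                server.stop(0);
            }
        } catch (IOException | InterruptedException ex) {
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        System.out.println(fetchFromLocalServer());
    }
}
```

Because send blocks the calling thread, this style only scales when the caller runs on a virtual thread.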

Which version would you prefer to write? To read? To debug?

I am guessing that most Java programmers prefer to use Java syntax for writing business logic. This is the principal benefit of using virtual threads.

I needed to test my modifications to Tomcat. I could have used a shell script or JMeter, but in the interest of practicing the use of virtual threads, here is a simple client. The client simply places requests and puts the results into a blocking queue:

    var q = new LinkedBlockingQueue<String>();
    try (ExecutorService exec = Executors.newVirtualThreadPerTaskExecutor()) {
        for (int i = 0; i < nthreads; i++) {
            exec.submit(() -> {
                try {
                    String response = getBody(url + "?sent=" + Instant.now(), HttpResponse.BodyHandlers.ofString());
                    q.put(response);
                    Thread.sleep(delay);
                }
                catch (Exception ex) {
                    ex.printStackTrace();
                }
            });
        }
    }

This is standard concurrent Java code. There are only two differences with virtual threads:

- Executors.newVirtualThreadPerTaskExecutor() instead of a thread pool.
- ExecutorService implements AutoCloseable, with a close method that blocks until all submitted tasks have completed.

Each submitted task blocks on the calls to getBody, put, and sleep. In addition, execution blocks in the close method of the executor. I don't care. With virtual threads, blocking is cheap.
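The blocking behavior of close can be checked with a small standalone sketch. It assumes Java 21 or later (or Java 19/20 with --enable-preview); the sleep stands in for the HTTP request, and the class name is hypothetical.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;

class CloseWaits {
    // Submits ntasks blocking tasks and returns the number of results collected.
    static int collect(int ntasks) {
        var q = new LinkedBlockingQueue<String>();
        try (ExecutorService exec = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < ntasks; i++) {
                int id = i;
                exec.submit(() -> {
                    Thread.sleep(50);        // stands in for a blocking request
                    q.put("result " + id);
                    return null;             // a Callable, so checked exceptions are allowed
                });
            }
        } // the implicit close() returns only after every submitted task has finished
        return q.size();
    }

    public static void main(String[] args) {
        System.out.println(collect(500) + " results");
    }
}
```

Since the queue is read only after the try block, all results are already in place and no further synchronization is needed.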

To try out the client, download code.zip, unzip and run (with Java 19):

    cd code
    mkdir bin
    javac -d bin --enable-preview --release 19 src/webclient/*.java
    java -cp bin --enable-preview webclient.Main 100 10 https://horstmann.com/random/word

Install Tomcat. Set the CATALINA_HOME environment variable to the installation directory. Check that JAVA_HOME points to a Java 19 installation.

We will use a servlet that sleeps for a short while and reports a few data:

- The sent request parameter (which the client sets to the time the request was sent)
- The time when the doGet method was called
- The time when the doGet method finished sleeping
- The current thread, formatted with toString

Here is the code for ThreadInfo.java. You also need this web.xml file.
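Since the servlet source is only linked, here is a sketch of the reporting logic, kept free of the servlet API so that it runs standalone. The class name, the method signature, and the sleep duration are assumptions; the actual ThreadInfo.java may differ. In a servlet, the returned text would be written to the response inside doGet.

```java
import java.time.Instant;

class ThreadInfoSketch {
    // Builds the report: sent time, start time, completion time, and the current thread.
    static String report(String sent, long sleepMillis) {
        Instant started = Instant.now();
        try {
            Thread.sleep(sleepMillis);   // simulates a blocking call to a database or service
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
        }
        Instant completed = Instant.now();
        return "Sent: " + sent + "\n"
            + "Started: " + started + "\n"
            + "Completed: " + completed + "\n"
            + Thread.currentThread() + "\n";
    }

    public static void main(String[] args) {
        System.out.print(report(Instant.now().toString(), 1000));
    }
}
```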

Compile and deploy:

    javac -d web/WEB-INF/classes/ -cp $CATALINA_HOME/lib/\* src/servlet/*.java
    jar cvf ThreadDemo.war -C web .
    cp ThreadDemo.war $CATALINA_HOME/webapps/

To test, start Tomcat:

$CATALINA_HOME/bin/startup.sh

Then use curl:

curl -s "http://localhost:8080/ThreadDemo/threadinfo?sent=$(date -Iseconds)"

Or run the client:

java -cp bin --enable-preview webclient.Main 1

You should get a response that looks like this:

    Sent: 2022-05-17T18:27:10.244228524Z
    Started: 2022-05-17T18:27:10.287297797Z
    Completed: 2022-05-17T18:27:11.304507264Z
    Thread[http-nio-8080-exec-2,5,main]

Now run 200 requests and note the time to completion:

time java -cp bin --enable-preview webclient.Main 200

For me, that took 3.6 seconds.

In Tomcat, you can plug in your own thread pool implementation. The documentation is murky on who uses the thread pool. If you use the default Http11NioProtocol, then that thread pool is used for the threads that service each request. That is the sweet spot for virtual threads.

There is a more modern Http11Nio2Protocol, which uses the pluggable executor for its internal threads, but uses a separate, apparently non-configurable, thread pool for the threads that service requests. I didn't pursue that further. In general, it doesn't make sense to replace all threads with virtual threads. You want to focus on those parts that run blocking workloads with arbitrary business logic that can benefit from the synchronous programming style.

The executor needs to implement Tomcat's org.apache.catalina.Executor interface. I copied the lifecycle methods from the standard executor and scheduled runnables on virtual threads in the VirtualThreadExecutor.java class. Here is the key point:

    private ExecutorService exec = Executors.newThreadPerTaskExecutor(
        Thread.ofVirtual()
            .name("myfactory-", 1)
            .factory());

    public void execute(Runnable command) {
        exec.submit(command);
    }
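The factory call can be tried outside Tomcat. This sketch assumes Java 21 or later (or Java 19/20 with --enable-preview) and uses a hypothetical class name; it reports the name and kind of the thread that runs the first submitted task.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class NamedVirtualThreads {
    // Runs one task on a named virtual thread and describes the thread that ran it.
    static String describeFirstThread() {
        try (ExecutorService exec = Executors.newThreadPerTaskExecutor(
                Thread.ofVirtual().name("myfactory-", 1).factory())) {
            return exec.submit(() -> Thread.currentThread().getName()
                + (Thread.currentThread().isVirtual() ? " (virtual)" : " (platform)")).get();
        } catch (InterruptedException | ExecutionException ex) {
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        System.out.println(describeFirstThread()); // prints "myfactory-1 (virtual)"
    }
}
```

The second argument of name is the starting value of a counter that is appended to the prefix, so later threads are named myfactory-2, myfactory-3, and so on.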

This server.xml file defines the executor and puts it to use in each connector. Here are the entries that are modified from the standard configuration:

    <Executor name="virtualThreadExecutor" className="executor.VirtualThreadExecutor"/>

    <Connector port="8080" protocol="HTTP/1.1"
               executor="virtualThreadExecutor"
               connectionTimeout="20000"
               maxConnections="-1"
               maxThreads="10000"
               redirectPort="8443" />

Here are the commands for installing the executor:

    javac -d bin -cp $CATALINA_HOME/lib/\* --enable-preview --release 19 \
        src/executor/*.java
    jar cvf vte.jar -C bin executor
    cp vte.jar $CATALINA_HOME/lib/
    cp conf/server.virtualthreads.xml $CATALINA_HOME/conf/server.xml

Restart Tomcat:

    $CATALINA_HOME/bin/shutdown.sh
    JAVA_OPTS="--enable-preview -Djdk.tracePinnedThreads=full" \
        $CATALINA_HOME/bin/startup.sh

Point your browser to http://localhost:8080. If you don't get the Tomcat welcome window, check that JAVA_HOME and CATALINA_HOME are properly set, and look inside $CATALINA_HOME/logs/catalina.out for clues.

Now run the web client again:

java -cp bin --enable-preview webclient.Main 1

Note that the thread was created by myfactory.

Run 200 concurrent requests:

java -cp bin --enable-preview webclient.Main 200

Whoa! 27 seconds. That's much, much worse than before! What happened?

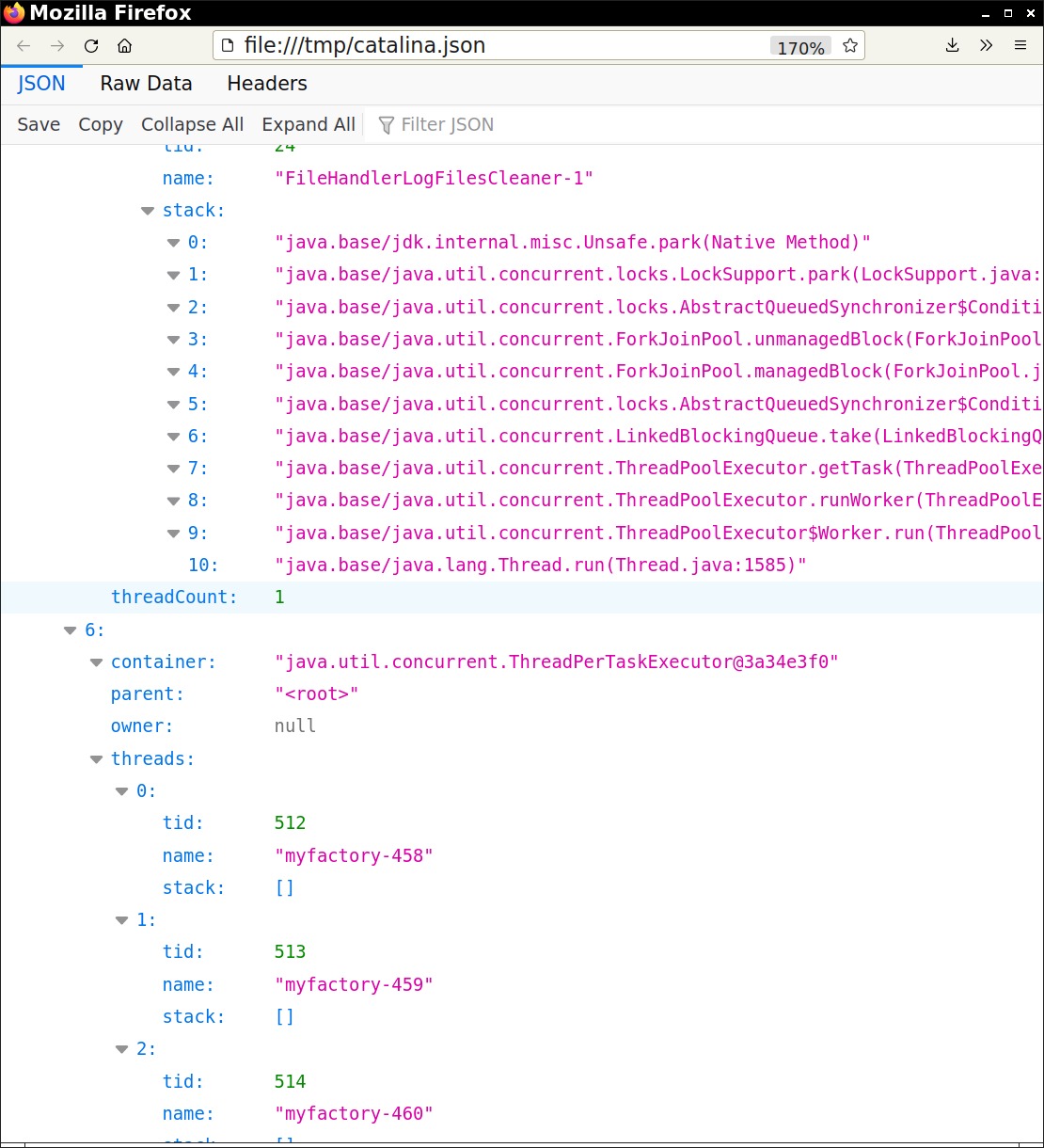

Looking at the timing data, we see that 200 requests are submitted at essentially the same time. What happens when Tomcat receives them? Let's resubmit and take a thread dump immediately afterwards:

jcmd $(jcmd | grep catalina | cut -d " " -f 1) Thread.dump_to_file -overwrite -format=json /tmp/catalina.json

Now look at /tmp/catalina.json. Firefox shows the JSON nicely.

There are about 200 virtual threads, many with no stack. They couldn't have finished, since otherwise we would have seen their output shortly after creation time. Something seems to block them between their launch and the start of the doGet method.

This could be deliberate throttling by Tomcat, but why doesn't the same throttling happen when one runs 200 requests with the default executor? More likely, the reason is pinning.

There are a few situations where blocking of virtual threads is not cheap:

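- When the thread executes a native method or a foreign function.
- When the thread executes code inside a synchronized block or method.

In these cases, the carrier thread blocks, and it can't run other virtual threads. It is pinned. If all carrier threads are pinned, no virtual threads can progress.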

The second restriction should eventually go away, as the JVM is reengineered to better support virtual threads. But it is an issue for now. In your own code, you can avoid it by using a ReentrantLock or another mechanism. But if you work with third party code, this is more troublesome. For example, the Tomcat source has about 1,000 occurrences of the synchronized keyword, and you don't want to look at all of them.
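As an illustration of the ReentrantLock alternative in your own code, here is a minimal sketch. The class is hypothetical, and the sleep stands in for any blocking call made while the lock is held. It assumes Java 21 or later (or Java 19/20 with --enable-preview).

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

class LockInsteadOfSynchronized {
    private final ReentrantLock lock = new ReentrantLock();
    private int balance;   // guarded by lock

    // Blocks while holding the lock; a waiting virtual thread is parked, not pinned.
    void deposit(int amount) throws InterruptedException {
        lock.lock();
        try {
            Thread.sleep(1);   // a blocking call inside the critical section
            balance += amount;
        } finally {
            lock.unlock();
        }
    }

    // Runs ntasks deposits of 1 on virtual threads and returns the final balance.
    static int run(int ntasks) {
        var account = new LockInsteadOfSynchronized();
        try (ExecutorService exec = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < ntasks; i++) {
                exec.submit(() -> { account.deposit(1); return null; });
            }
        }
        return account.balance;
    }

    public static void main(String[] args) {
        System.out.println("Balance: " + run(200));
    }
}
```

The unlock call belongs in a finally clause so that an exception in the critical section cannot leave the lock held.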

The VM flag -Djdk.tracePinnedThreads will print a stack trace when pinning occurs. (If multiple threads pin for the same reason, there will be only one report.) See inside $CATALINA_HOME/logs/catalina.out, and you may see such a stack trace. Look carefully for a <== arrow such as this one:

    ...
    org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49) <== monitors:1
    ...

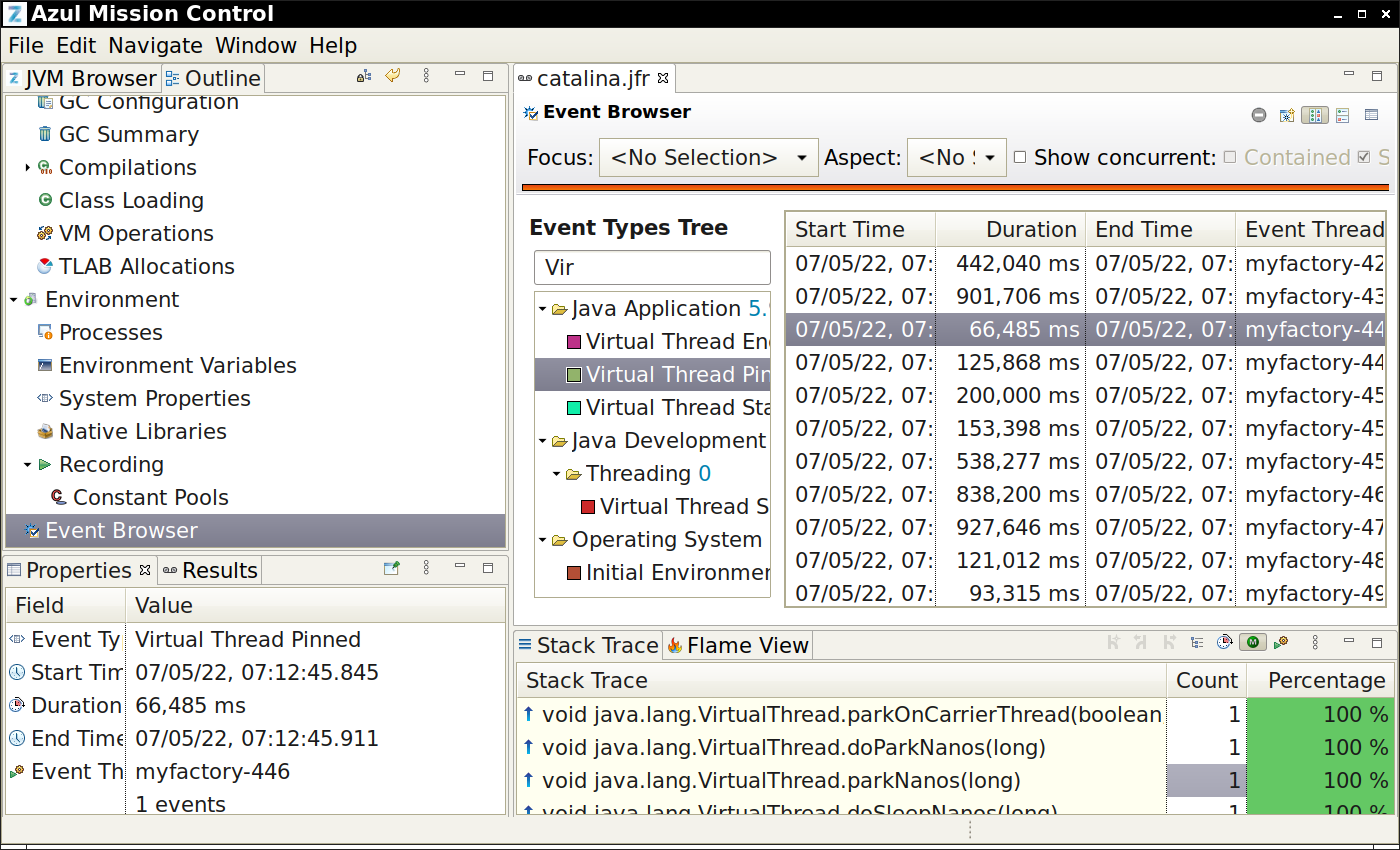

Alternatively, you can use Java Flight Recorder. Start a recording:

jcmd $(jcmd | grep catalina | cut -d " " -f 1) JFR.start name=catalina filename=/tmp/catalina.jfr

Launch 200 requests, and stop the recording:

jcmd $(jcmd | grep catalina | cut -d " " -f 1) JFR.stop name=catalina

Load /tmp/catalina.jfr into your favorite Mission Control viewer. I used ZMC. In the left pane, look for Event Browser. Type Virtual into the Search the tree field. Then look for Virtual Thread Pinned.
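The same event can also be collected programmatically with the jdk.jfr API, which may be handy for a quick experiment outside Tomcat. The sketch below is an assumption about how one might do that, not part of the article's setup. It records jdk.VirtualThreadPinned events while one virtual thread sleeps inside a synchronized block. How many events appear depends on the JDK version, since newer releases no longer pin in this situation.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordingFile;

class PinnedEventCounter {
    private static final Object monitor = new Object();

    // Records while a virtual thread blocks inside synchronized; returns the event count.
    static long countPinnedEvents() {
        try (Recording recording = new Recording()) {
            // A zero threshold also captures short pinning episodes
            recording.enable("jdk.VirtualThreadPinned")
                .withThreshold(Duration.ZERO)
                .withStackTrace();
            recording.start();
            Thread t = Thread.startVirtualThread(() -> {
                synchronized (monitor) {
                    try {
                        Thread.sleep(50);   // blocking while holding a monitor
                    } catch (InterruptedException ex) {
                        Thread.currentThread().interrupt();
                    }
                }
            });
            t.join();
            recording.stop();
            Path file = Files.createTempFile("pinned", ".jfr");
            recording.dump(file);
            long count = RecordingFile.readAllEvents(file).stream()
                .filter(e -> e.getEventType().getName().equals("jdk.VirtualThreadPinned"))
                .count();
            Files.delete(file);
            return count;
        } catch (IOException | InterruptedException ex) {
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        System.out.println("Pinned events: " + countPinnedEvents());
    }
}
```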

Either way, you now have a stack trace where you can find the guilty party. Download the Tomcat source and go over the stack trace. In our case, the culprit is the following code in java/org/apache/tomcat/util/net/SocketProcessorBase.java:

    public final void run() {
        synchronized (socketWrapper) {
            // It is possible that processing may be triggered for read and
            // write at the same time. The sync above makes sure that processing
            // does not occur in parallel. The test below ensures that if the
            // first event to be processed results in the socket being closed,
            // the subsequent events are not processed.
            if (socketWrapper.isClosed()) {
                return;
            }
            doRun();
        }
    }

I replaced that with

    private Lock socketWrapperLock = new ReentrantLock();

    public final void run() {
        socketWrapperLock.lock();
        try {
            if (socketWrapper.isClosed()) {
                return;
            }
            doRun();
        }
        finally {
            socketWrapperLock.unlock();
        }
    }
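One detail to weigh: the original code synchronizes on socketWrapper, and its comment says that read and write processing for the same socket may be triggered at the same time. If those events are handled by different processor objects, a lock stored in each processor only serializes calls on that one object. The sketch below is an assumption about how to keep the original exclusion: the lock lives in the shared wrapper. Wrapper and Processor are hypothetical stand-ins, not Tomcat classes. It assumes Java 21 or later (or Java 19/20 with --enable-preview).

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class SharedLockSketch {
    // Hypothetical stand-in for a socket wrapper that owns the lock guarding it.
    static class Wrapper {
        final Lock lock = new ReentrantLock();
        int inside;      // processors currently in the critical section (guarded by lock)
        int maxInside;   // highest value of inside ever observed (guarded by lock)
    }

    // Hypothetical stand-in for a processor; several of them share one wrapper.
    static class Processor implements Runnable {
        private final Wrapper wrapper;

        Processor(Wrapper wrapper) { this.wrapper = wrapper; }

        public void run() {
            wrapper.lock.lock();   // the shared lock, not a per-processor field
            try {
                wrapper.inside++;
                wrapper.maxInside = Math.max(wrapper.maxInside, wrapper.inside);
                try {
                    Thread.sleep(1);   // stands in for the actual request processing
                } catch (InterruptedException ex) {
                    Thread.currentThread().interrupt();
                } finally {
                    wrapper.inside--;
                }
            } finally {
                wrapper.lock.unlock();
            }
        }
    }

    // Runs nprocessors processors on one wrapper; returns the peak concurrency seen.
    static int maxConcurrent(int nprocessors) {
        Wrapper wrapper = new Wrapper();
        try (ExecutorService exec = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < nprocessors; i++) {
                exec.submit(new Processor(wrapper));
            }
        }
        return wrapper.maxInside;
    }

    public static void main(String[] args) {
        System.out.println("Peak concurrency: " + maxConcurrent(100)); // prints 1
    }
}
```

With a separate lock in each Processor, the peak concurrency in this sketch could exceed 1, which is exactly what the original synchronized block rules out.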

To test this, make the source code change. Then:

    cd /path/to/apache-tomcat-10.0.20-src
    ant
    cd - # back to the code directory
    $CATALINA_HOME/bin/shutdown.sh
    CATALINA_HOME=/path/to/apache-tomcat-10.0.20-src/output/build
    cp ThreadDemo.war $CATALINA_HOME/webapps/
    cp vte.jar $CATALINA_HOME/lib/
    cp conf/server.virtualthreads.xml $CATALINA_HOME/conf/server.xml
    JAVA_OPTS="--enable-preview -Djdk.tracePinnedThreads=full" \
        $CATALINA_HOME/bin/startup.sh
    java -cp bin --enable-preview webclient.Main 200

With that change, 200 requests took 3 seconds, and Tomcat can easily take 10,000 requests.

When early versions of virtual threads appeared, everyone was excitedly running a million of them concurrently. But that's not what's exciting about them. You can have a million sleeping threads, but if they do any actual work, they will consume stack and heap resources. Loom does not mint transistors, and you are limited by the resources of the tasks that you run concurrently.

Assuming the tasks mostly block, you have enough resources for many more tasks than OS threads. And then the real advantage of virtual threads kicks in. You are freed from the tyranny of asynchronous style. No more carefully constructed pipelines of thenThis and thenThat and exceptionally, but simply loops, function calls, and exceptions.

That is particularly attractive for business logic, which is notoriously fiddly and filled with edge cases that are hard to express with the methods that a reactive framework provides out of the box. And it is implemented by programmers who may not have the ingenuity and patience that those frameworks often require.

Those programmers will demand virtual threads from the providers of servlet runners and app frameworks. And it is not a hard thing to do. For the framework providers. They know where those thread pools are, and what code may need to be tweaked between the thread start and the service methods. You have just seen how to do that in a simple case.
